In the world of genomics research, every data point carries the potential to unlock the secrets of life itself. However, as genomics datasets grow in size and complexity, so do the challenges of managing the associated disk space. This is the story of a recent journey that began with a simple task: using the Integrative Genomics Viewer (IGV) to inspect many signal tracks. Little did I know that it would lead to a deep dive into the enigmatic realm of Linux file handles and the secrets they hold.

The Enigmatic Message

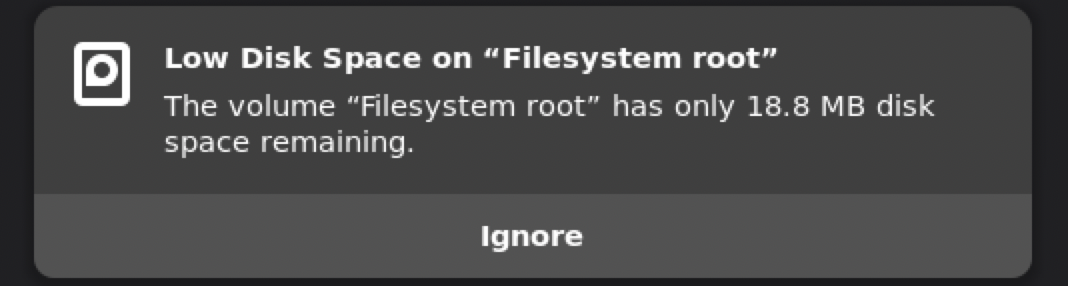

The story unfolds in the midst of my genomics analysis, while I was inspecting numerous signal tracks with IGV. I worked through the samples in batches: inspect, analyze, delete. As I diligently removed each batch of tracks from IGV and deleted the corresponding files from the filesystem, all seemed well, until an unexpected message disrupted my rhythm: “Root disk full.”

The Perplexing Discrepancy

The message left me bewildered. How could the root disk be full when I had meticulously deleted the files associated with the inspected signal tracks? I first checked the situation with the df command and confirmed that the system still believed it was running out of space.

```
$ df -h
Filesystem      Size  Used Avail Use% Mounted on
tmpfs            50G  3.0M   50G   1% /run
/dev/sda1        58G   58G  455M 100% /
...
```

Determined to solve this mystery, I turned to the du command, the venerable tool for disk usage analysis. Yet, to my astonishment, du told a different story: by its count only 24G was in use, which should have left ample free space.

```
$ sudo du -h --max-depth=1 /
5.4G    /home
7.1M    /tmp
4.9G    /snap
5.1G    /var
...
24G     .
```

The Unyielding File Handles

The discrepancy between the usage reported by df and the total from du left me scratching my head. What could possibly be holding onto that disk space? I wondered whether I had missed something when running du. But no, I had run it on the entire root directory, with root privileges via sudo. So what other mysterious forces could be at play?

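A crucial revelation came as I delved deeper: some files might still be open despite having been deleted. On Linux, deleting a file only removes its directory entry; its data blocks are freed only when no directory entry refers to it and the last process holding it open has closed it. du adds up the files it can reach through the directory tree, so it cannot see such files, whereas df reports the blocks the filesystem has actually allocated, so it still counts them. The question now was: how do I unearth these orphaned files and identify the processes holding them hostage?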
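The effect is easy to reproduce on a small scale. The sketch below is illustrative rather than part of the original investigation: it assumes Linux with /proc and GNU coreutils, and the file name track.bin is a made-up stand-in for a signal track. It writes a 10 MiB file, keeps it open on a shell descriptor, deletes it, and then compares what du sees with what the open descriptor still reaches.

```shell
#!/bin/sh
# Sketch: reproduce the df/du mismatch with a deleted-but-still-open file.
# Assumes Linux (/proc) and GNU coreutils; everything happens in a throwaway temp directory.
set -eu

demo_dir=$(mktemp -d)
demo_file="$demo_dir/track.bin"   # hypothetical stand-in for a signal track

# Create a 10 MiB file of zeros.
dd if=/dev/zero of="$demo_file" bs=1M count=10 2>/dev/null

# Keep the file open on descriptor 3 of this shell, as a viewer does with a loaded track.
exec 3<"$demo_file"

# Remove the only directory entry. The inode and its data blocks survive
# because descriptor 3 still refers to them.
rm "$demo_file"

# du walks directory entries, so it no longer sees the file...
du_kib=$(du -sk "$demo_dir" | cut -f1)

# ...but the open descriptor still leads to the full-size, unlinked file.
fd_target=$(readlink "/proc/$$/fd/3")
held_bytes=$(stat -L -c %s "/proc/$$/fd/3")

echo "du reports ${du_kib} KiB under $demo_dir"
echo "fd 3 -> $fd_target ($held_bytes bytes)"

# Closing the last descriptor is what finally lets the kernel free the blocks.
exec 3<&-
rmdir "$demo_dir"
```

While descriptor 3 is open, df on the filesystem holding the temporary directory still counts those 10 MiB; only the final `exec 3<&-` lets them go.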

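To track them down, I turned to lsof. According to its manual, +L1 selects open files whose link count is less than 1, that is, files that have been unlinked, and the +aL1 form followed by a file system (here /) restricts the selection to unlinked open files on that file system. The command was: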

```
$ lsof +aL1 /
COMMAND    PID USER   FD   TYPE DEVICE   SIZE/OFF NLINK    NODE NAME
java    455114 li    118r   REG    8,1  624602003     0 1552486 /home/li/.local/share/Trash/expunged/751520316 (deleted)
java    455114 li    119r   REG    8,1 1180123471     0 1552300 /home/li/.local/share/Trash/expunged/2560126835 (deleted)
java    455114 li    129r   REG    8,1  225277230     0 1578319 /home/li/.local/share/Trash/expunged/2423833192 (deleted)
...
java    455114 li    221r   REG    8,1 2846628790     0 1582420 /home/li/.local/share/Trash/expunged/2658608637 (deleted)
```

The output was illuminating. A single Java process, with PID 455114, was holding all of the orphaned files open, and each of them contributed to the gap between df and du. The SIZE/OFF column, which gives each file's size in bytes, made this plain: the entries shown range from about 225 MB to 2.8 GB. The NLINK value of 0 on every line confirms that no directory entry points to these files anymore.
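lsof prints one line per descriptor, which gets long when a process holds many deleted files. As a rough cross-check (an alternative technique, not the command used in this story), the sketch below reads /proc directly and totals, per PID, the sizes of open regular files whose link count is 0. It assumes Linux and GNU stat, deleted_bytes_by_pid is a hypothetical helper name, and unlike `lsof +aL1 /` it is not limited to a single filesystem. Sizes are apparent sizes in bytes, like the SIZE/OFF column, so sparse files are overstated.

```shell
#!/bin/sh
# Sketch: total the bytes held by deleted-but-open regular files, per PID,
# by reading /proc directly (a rough scripted cousin of `lsof +L1`).
# Assumes Linux /proc and GNU stat; run as root to include other users' processes.
# Prints "<pid> <bytes>" lines, largest first. deleted_bytes_by_pid is a hypothetical name.
deleted_bytes_by_pid() {
  for fd in /proc/[0-9]*/fd/*; do
    # [ -f ] follows the descriptor link; keep regular files only.
    [ -f "$fd" ] || continue
    # Link count, size in bytes, and device:inode of the open file
    # (skipped if the process exits or closes the descriptor meanwhile).
    info=$(stat -L -c '%h %s %d:%i' "$fd" 2>/dev/null) || continue
    pid=${fd#/proc/}
    pid=${pid%%/*}
    echo "$pid $info"
  done | awk '
    # Fields: pid, link count, size, device:inode.
    # Link count 0 means no directory entry is left; count each file once per PID
    # even when it is open on several descriptors.
    $2 == 0 && !seen[$1 " " $4]++ { sum[$1] += $3 }
    END { for (p in sum) printf "%s %.0f\n", p, sum[p] }' | sort -k2,2nr
}

deleted_bytes_by_pid
```

Counting each device:inode pair once per PID keeps a file that is open on several descriptors from being double-counted, while the same file held by two different processes still shows up under both.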

The Pursuit of Resolution

Armed with this knowledge, I was determined to catch the ghost occupying my disk space. The first step was to identify the program behind those tenacious file handles, so I ran ps aux and filtered the results with grep on the process ID (455114) I had found earlier:

```
$ ps aux | grep 455114
```

The result was a revealing snapshot of the process:

Ah, the culprit was none other than IGV itself! IGV is a Java application, which explains the java command name in the lsof output. Even though I had removed the tracks from IGV and deleted the corresponding files from the filesystem, IGV had clung to the file handles, refusing to release them. The pieces of the puzzle were finally coming together, and a solution was now within reach.
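As an aside, piping ps aux through grep usually also prints the grep process itself, because its own command line contains the number being searched for. A more direct lookup, sketched here assuming the procps implementation of ps (show_process is a hypothetical helper name), asks ps for that one PID only:

```shell
#!/bin/sh
# Sketch: look up a single PID directly instead of grepping `ps aux`.
# show_process is a hypothetical helper name; assumes the procps version of ps.
show_process() {
  # -p selects the PID; -ww disables width truncation of the command line;
  # the empty header after each column name ("=") suppresses the header line.
  ps -ww -p "$1" -o pid=,user=,etime=,args=
}
```

Running `show_process 455114` would then print only that process's PID, owner, elapsed time, and full command line.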

As soon as I terminated the IGV instance, the space was released: usage on / dropped from 58G to 18G, and the problem was solved!

```
$ df -h
Filesystem      Size  Used Avail Use% Mounted on
tmpfs            50G  3.0M   50G   1% /run
/dev/sda1        58G   18G   41G  31% /
...
```
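Here I simply terminated IGV. If the process holding the files has to be stopped from a script, it helps to wait until it has actually exited before re-checking df, because the descriptors it still holds are only closed when it exits. A minimal sketch, assuming Linux /proc; stop_and_wait and its 30-second default timeout are hypothetical choices, not part of IGV or any standard tool:

```shell
#!/bin/sh
# Sketch: ask a process to exit, then wait until it is really gone before re-checking
# disk space. stop_and_wait and its 30-second default timeout are hypothetical choices.
# Assumes Linux /proc; `local` works in bash, dash and busybox sh, though it is not POSIX.
stop_and_wait() {
  local pid="$1" timeout="${2:-30}" waited=0
  [ -e "/proc/$pid" ] || return 0        # no such process: nothing to do
  kill -TERM "$pid" || return 1          # e.g. permission denied
  while [ -e "/proc/$pid" ]; do          # the /proc entry disappears once the process is gone
    if [ "$waited" -ge "$timeout" ]; then
      echo "process $pid still running after ${timeout}s" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  return 0
}
```

For example, `stop_and_wait 455114 && df -h /` would send SIGTERM to the process from this story and show the freed space once it is gone. SIGTERM rather than SIGKILL is used so the application gets a chance to shut down cleanly.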