Jupyter Notebook/Lab is a fantastic tool for data analysis, and I use it a lot in my daily research. But one of my habits has made me suffer a lot: I keep updating the same notebook for a long time, and to keep it neat I regularly delete cells that seem “redundant” or “useless” to me at the time (or I simply delete them by accident). So I have often wondered whether I could add version control to notebooks, so that when something goes wrong I can roll back to the state before a specific event.

Start to secure your notebooks

The basic idea is to add a hook that Jupyter runs whenever it finishes saving a file (notebooks in particular). The hook will:

- create a git repo in the folder where the notebook is located (if there isn’t one already);

- copy the notebook to the repo (and convert the notebook to a Python script);

- add all changes to git;

- commit the modifications.

Note: We often work intensively for a short period and make many modifications to a notebook. To avoid creating too many commits, it is better to randomly skip some of them. In this post, let $ts$ denote the time span (in seconds) between the last commit and the current modification; the modified notebook then gets committed with probability $\min(1, \frac{ts}{3600})$.
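To make the rule concrete, here is a minimal sketch of the commit probability described in the note. The names `commit_probability` and `should_commit` are only illustrative (they are not part of Jupyter or git), and the hook itself may implement the rule differently.

```python
import random


def commit_probability(ts, window=3600):
    """Probability min(1, ts / window) of committing a modification.

    ts is the number of seconds since the last commit; negative values
    (e.g. caused by clock drift) are treated as 0.
    """
    return min(1.0, max(ts, 0) / window)


def should_commit(ts, rng=random):
    """Randomly decide whether a modification made ts seconds after
    the last commit gets committed."""
    return rng.random() < commit_probability(ts)


# Print the probability for a few time spans (in seconds).
for ts in (0, 900, 1800, 3600, 7200):
    print(ts, commit_probability(ts))
```

For example, a save made 15 minutes (900 seconds) after the last commit is committed with probability 0.25, while anything saved an hour or more after the last commit is always committed.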

Here are the steps to enable automatic backup (you MUST install git first):

- Make sure you have a configuration file (`jupyter_notebook_config.py`) for Jupyter Notebook or Jupyter Lab. By default, it’s located in `~/.jupyter`. If you are not sure, run `jupyter --config-dir`, which will tell you where the file is located. If you don’t have a configuration file, generate one with `jupyter notebook --generate-config`;

- Add the following code to the beginning of the configuration file:

```python
import json
import os
from random import choices
from shutil import copyfile
from subprocess import check_call, CalledProcessError
from time import ctime, time


def post_save(model, os_path, contents_manager):
    """
    This function will do the following jobs:
    1. creating a new folder named vcs (version control system)
    2. converting jupyter notebooks to scripts (.py, .R, ...)
    3. moving converted files to vcs folder
    4. keeping track of these files via git
    """
    if model['type'] != 'notebook':
        return
    logger = contents_manager.log
    d, fname = os.path.split(os_path)

    # skip newborn notebooks
    if fname.startswith("Untitled"):
        return

    vcs_d = os.path.join(d, "vcs")
    if not os.path.exists(vcs_d):
        logger.info("Creating vcs folder at %s" % d)
        os.makedirs(vcs_d)

    # `git rev-parse` fails if vcs_d is not inside a git repo yet
    try:
        check_call(['git', 'rev-parse'], cwd=vcs_d)
    except CalledProcessError:
        logger.info("Initiating git repo at %s" % d)
        check_call(['git', 'init'], cwd=vcs_d)

    def add_file_or_not(file_name, folder):
        file_path = os.path.join(folder, file_name)
        if not os.path.exists(file_path):
            # nothing has been backed up yet: always save
            return True
        # seconds since the previous backup of this file
        delta = max(time() - os.path.getctime(file_path), 0)
        # the longer since the last backup, the more likely the new
        # modification is saved: probability = min(1, delta / 3600)
        prob = min(delta / 3600, 1)
        return bool(choices((0, 1), weights=(1 - prob, prob), k=1)[0])

    rfn, ext = os.path.splitext(fname)
    # in case notebook is not a python-based one (R, ...);
    # fall back to ".py" if the notebook has no language info
    with open(os_path, encoding="utf-8") as fh:
        tmp = json.load(fh)
    script_ext = tmp.get("metadata", {}).get("language_info", {}).get("file_extension", ".py")
    script_fn = rfn + script_ext

    # compare against the previous backup, which lives in the vcs folder
    # (the converted script is moved there, so it never stays in d)
    if add_file_or_not(script_fn, vcs_d):
        check_call(['jupyter', 'nbconvert', '--to', 'script', fname], cwd=d)
        os.replace(os.path.join(d, script_fn), os.path.join(vcs_d, script_fn))
        copyfile(os_path, os.path.join(vcs_d, fname))
        check_call(['git', 'add', script_fn, fname], cwd=vcs_d)
        commit_msg = 'Autobackup for %s (%s)' % (fname, ctime())
        try:
            check_call(['git', 'commit', '-m', commit_msg], cwd=vcs_d)
        except CalledProcessError:
            # e.g. nothing to commit because the notebook did not change
            pass
    else:
        logger.info("File too new to be traced.")
```

- Search for `post_save_hook` in the configuration file; uncomment this line (if you see a `#` at the beginning of the line, remove it), and change it to:

```python
c.FileContentsManager.post_save_hook = post_save
```

- Restart Jupyter Lab or Notebook.

Restore from disasters

The easiest way to restore a Jupyter notebook from backups is to add the vcs folder to a git GUI (like Sourcetree) and export the version that you want to roll back to. But in case GUIs are not available, you can use the following commands.

Let’s assume the notebook is `a.ipynb` and it is located at `/foo/bar`, so the git repo is located at `/foo/bar/vcs`. Then you can:

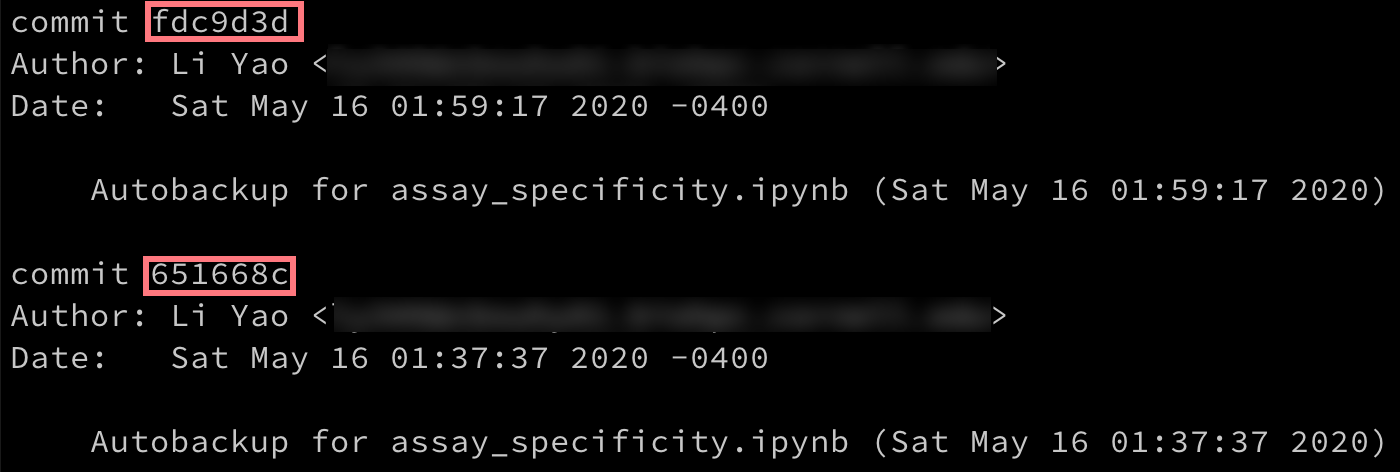

- use `git log --abbrev-commit` to see the history of modifications;

- choose the candidate commit according to its commit time, and record the commit id (red boxes above);

- use `git show COMMIT_ID:FILE_NAME > SAVE_TO` to export the committed file to SAVE_TO. If you want to export the notebook itself, replace FILE_NAME with `a.ipynb`; if you just want to export the Python code, replace FILE_NAME with `a.py`.
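The same restore steps can also be scripted. Below is a minimal sketch in Python that wraps the git commands above with `subprocess`. The helper names `list_backups` and `restore_file` are hypothetical, the log format is my own choice, and the paths in the usage comment follow the `/foo/bar` assumption from this section.

```python
import subprocess


def list_backups(repo_dir, max_count=20):
    """Return the most recent commits as (short id, date, subject) tuples,
    newest first."""
    out = subprocess.run(
        ["git", "log", "--date=iso", "--max-count=%d" % max_count,
         "--pretty=format:%h|%ad|%s"],
        cwd=repo_dir, capture_output=True, text=True, check=True,
    ).stdout
    # Split at most twice so that a "|" inside the subject is preserved.
    return [tuple(line.split("|", 2)) for line in out.splitlines() if line]


def restore_file(repo_dir, commit_id, file_name, save_to):
    """Write file_name as it was at commit_id to save_to.

    Equivalent to: git show COMMIT_ID:FILE_NAME > SAVE_TO
    """
    content = subprocess.run(
        ["git", "show", "%s:%s" % (commit_id, file_name)],
        cwd=repo_dir, capture_output=True, check=True,
    ).stdout
    with open(save_to, "wb") as fh:
        fh.write(content)


# Example usage (paths and COMMIT_ID are placeholders):
# for commit_id, date, subject in list_backups("/foo/bar/vcs"):
#     print(commit_id, date, subject)
# restore_file("/foo/bar/vcs", "COMMIT_ID", "a.py", "/foo/bar/a_restored.py")
```

Writing the restored file under a new name, rather than over the current notebook, keeps the present version untouched until you have checked the old one.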

A more comprehensive implementation

A better implementation of this function would:

- enable backup/version control only in specific folders instead of everywhere;

- trace other non-notebook files, like Python/R scripts;

- allow pushing changes to remote repositories.

With the following two changes, the above three goals can be met smoothly:

- Add a configuration file (`backup.conf`) to the folder in which you want to enable version control. Below is a template for this file:

```ini
[repo]
local_repo_folder = vcs
remote_repo =

[backup]
backup_notebooks = no
backup_notebook_converted_scripts = yes
backup_by_filetypes = yes
backup_filetypes = .py|.R
```

The keys in the configuration file stand for:

- `local_repo_folder`: name of the local folder in which the git repo will be located;

- `remote_repo`: remote address for the repo; leave it blank if you don’t want to push these changes to other servers;

- `backup_notebooks`: set it to `yes` to enable tracing notebooks in `.ipynb` format. NOTE: if your notebook contains a lot of images or a significant amount of output, you may want to set it to `no` to save some space;

- `backup_notebook_converted_scripts`: set it to `yes` to enable tracing notebooks in `.py` format, or whatever format matches the language the notebook is based on;

- `backup_by_filetypes` and `backup_filetypes`: set the first one to `yes` to enable tracing other text files with extensions listed in `backup_filetypes`. In this example, all files ending with `.py` and `.R` will be traced (case-insensitive).

- Modify `post_save`:

```python
import os
import json
from configparser import ConfigParser
from time import ctime, time
from random import choices
from subprocess import check_call, CalledProcessError
from shutil import copyfile


def post_save(model, os_path, contents_manager):
    """
    This function will do the following jobs:
    1. creating a new folder for the local git repo (named in backup.conf)
    2. converting jupyter notebooks to scripts (.py, .R, ...)
    3. moving converted files to the repo folder
    4. keeping track of these files via git
    """
    d, fname = os.path.split(os_path)
    conf_file = os.path.join(d, "backup.conf")
    # backup is only enabled in folders that contain a backup.conf
    if not os.path.exists(conf_file):
        return
    config = ConfigParser()
    config.optionxform = str  # keep option names case-sensitive
    config.read(conf_file)
    supported_files = set([ft.lower() for ft in config.get("backup", "backup_filetypes").split("|")])
    logger = contents_manager.log

    vcs_d = os.path.join(d, config.get("repo", "local_repo_folder"))
    if not os.path.exists(vcs_d):
        logger.info("Creating vcs folder at %s" % d)
        os.makedirs(vcs_d)

    repo_init = 0
    # `git rev-parse` fails if vcs_d is not inside a git repo yet
    try:
        check_call(['git', 'rev-parse'], cwd=vcs_d)
    except CalledProcessError:
        logger.info("Initiating git repo at %s" % d)
        check_call(['git', 'init'], cwd=vcs_d)
        if config.get("repo", "remote_repo") != "":
            check_call(['git', 'remote', 'add', 'origin', config.get("repo", "remote_repo")], cwd=vcs_d)
        repo_init = 1

    def add_file_or_not(file_name, folder):
        file_path = os.path.join(folder, file_name)
        if not os.path.exists(file_path):
            # nothing has been backed up yet: always save
            return True
        # seconds since the previous backup of this file
        delta = max(time() - os.path.getctime(file_path), 0)
        # the longer since the last backup, the more likely the new
        # modification is saved: probability = min(1, delta / 3600)
        prob = min(delta / 3600, 1)
        return bool(choices((0, 1), weights=(1 - prob, prob), k=1)[0])

    rfn, ext = os.path.splitext(fname)
    lext = ext.lower()
    updated_files = []
    if model["type"] == "notebook":
        # skip newborn notebooks
        if fname.startswith("Untitled"):
            return
        # in case notebook is not a python-based one (R, ...);
        # fall back to ".py" if the notebook has no language info
        with open(os_path, encoding="utf-8") as fh:
            tmp = json.load(fh)
        script_ext = tmp.get("metadata", {}).get("language_info", {}).get("file_extension", ".py")
        script_fn = rfn + script_ext
        # compare against the previous backup, which lives in the repo folder
        if add_file_or_not(script_fn, vcs_d):
            if config.get("backup", "backup_notebooks") == "yes":
                copyfile(os_path, os.path.join(vcs_d, fname))
                updated_files.append(fname)
            if config.get("backup", "backup_notebook_converted_scripts") == "yes":
                check_call(['jupyter', 'nbconvert', '--to', 'script', fname], cwd=d)
                os.replace(os.path.join(d, script_fn), os.path.join(vcs_d, script_fn))
                updated_files.append(script_fn)
        else:
            logger.info("File too new to be traced.")
    elif config.get("backup", "backup_by_filetypes") == "yes" and lext in supported_files:
        copyfile(os_path, os.path.join(vcs_d, fname))
        updated_files.append(fname)

    if len(updated_files) > 0:
        cmd = ['git', 'add']
        cmd.extend(updated_files)
        check_call(cmd, cwd=vcs_d)
        commit_msg = 'Autobackup for %s (%s)' % (fname, ctime())
        try:
            check_call(['git', 'commit', '-m', commit_msg], cwd=vcs_d)
            if config.get("repo", "remote_repo") != "":
                if repo_init:
                    # first push: HEAD pushes the current branch,
                    # whether it is named master or main
                    check_call(['git', 'push', '--set-upstream', 'origin', 'HEAD'], cwd=vcs_d)
                else:
                    check_call(['git', 'push'], cwd=vcs_d)
        except CalledProcessError as e:
            logger.info(e)
```
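To see how the keys in `backup.conf` are interpreted, the sketch below parses the template from a string with `configparser` and applies the same file-type check that `post_save` uses for non-notebook files. The helpers `load_config` and `should_backup` and the file names are hypothetical; they only illustrate the idea and are not part of the hook.

```python
import os
from configparser import ConfigParser

# The backup.conf template from this post, inlined for the example.
TEMPLATE = """
[repo]
local_repo_folder = vcs
remote_repo =

[backup]
backup_notebooks = no
backup_notebook_converted_scripts = yes
backup_by_filetypes = yes
backup_filetypes = .py|.R
"""


def load_config(text):
    """Parse the contents of a backup.conf file."""
    config = ConfigParser()
    config.optionxform = str  # keep option names case-sensitive
    config.read_string(text)
    return config


def should_backup(config, file_name):
    """True if a non-notebook file would be traced by its extension."""
    if config.get("backup", "backup_by_filetypes") != "yes":
        return False
    supported = {ft.lower() for ft in config.get("backup", "backup_filetypes").split("|")}
    return os.path.splitext(file_name)[1].lower() in supported


config = load_config(TEMPLATE)
print(config.get("repo", "local_repo_folder"))
print(repr(config.get("repo", "remote_repo")))
print(should_backup(config, "analysis.R"), should_backup(config, "notes.txt"))
```

With the template above, `remote_repo` is read as an empty string (so nothing is pushed), `analysis.R` is traced, and `notes.txt` is ignored.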